The exponential growth of generative AI has propelled a wave of productivity enhancements across nearly every sector and industry. From streamlining creative processes to facilitating complex problem-solving, generative AI is opening up countless new ways for enterprises to operate and innovate faster and more efficiently. While productivity gains are real and measurable, it is essential to understand the risks associated with deploying generative AI, and how to best mitigate their impact.

Understanding the Data Challenges

There are two primary data concerns associated with the use of generative AI. The first stems from how these tools operate. At their core, generative AI tools are powered by large language models (LLMs). These models are trained on vast datasets, which lets them discern patterns among words and phrases and generate contextually appropriate responses. Providers may also use submitted data to refine and optimize their models, meaning data entered in a prompt can end up being used to train the models going forward. This is particularly problematic when sensitive or protected data is shared with a generative AI model.

The second major data concern is the tracking and logging of information exchanged with generative AI tools. As these models are deployed, organizations can find themselves in possession of highly personal customer or employee information, which, if not managed appropriately, can put them out of compliance with HIPAA or other data protection regulations.

These data challenges give rise to three significant risk categories that organizations must address when dealing with generative AI.

Regulatory Compliance Risk

Regulatory compliance risk primarily revolves around the potential for regulated data to be either leaked outside an organization's boundaries or inappropriately stored and accessed internally. Personally identifiable information (PII), protected health information (PHI), payment card industry (PCI) information, and other compliance-defined data types exist in abundance in organizations across industries. Inadvertently sharing such highly sensitive information can violate data protection regulations such as HIPAA, CCPA, and GDPR, with severe legal and financial consequences. Beyond triggering significant fines and penalties, the unauthorized disclosure of PII, PHI, or PCI data erodes consumer trust and confidence.
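To make the exposure concrete, here is a minimal sketch of one possible safeguard: scanning a prompt for a few regulated data patterns and masking them before the text leaves the organization. The function name `redact_prompt` and the three patterns are hypothetical and chosen for illustration only. They are not Liminal's method, and production-grade detection would need far broader coverage than simple regular expressions can provide.

```python
import re

# Hypothetical, deliberately simple patterns for illustration only.
# Real PII/PHI/PCI detection requires much broader and more robust coverage.
PATTERNS = {
    "EMAIL": re.compile(r"\b[\w.+-]+@[\w-]+\.[\w.-]+\b"),
    "SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    # 13 to 16 digits, optionally separated by single spaces or hyphens.
    "CARD": re.compile(r"\b\d(?:[ -]?\d){12,15}\b"),
}


def redact_prompt(prompt: str) -> tuple[str, dict[str, int]]:
    """Mask matches of each pattern and report how many were found per type."""
    counts: dict[str, int] = {}
    for label, pattern in PATTERNS.items():
        prompt, found = pattern.subn(f"[{label}]", prompt)
        if found:
            counts[label] = found
    return prompt, counts


if __name__ == "__main__":
    text = "Contact jane.doe@example.com, SSN 123-45-6789, card 4111 1111 1111 1111."
    cleaned, report = redact_prompt(text)
    print(cleaned)
    print(report)
```

The returned counts could feed an audit trail without storing the sensitive values themselves, which also speaks to the logging concern described earlier.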

Sensitive Data and IP Risk

Perhaps the most prevalent concern within organizations regarding generative AI, sensitive data and IP risk centers on the threat of leaking intellectual property (IP) or other proprietary data. Considering that IP assets typically constitute over 65% of the total value of Fortune 500 companies, this apprehension is well-founded. In 2023, Samsung encountered three separate instances in a single month where employees inadvertently shared trade secrets with OpenAI's LLMs, leading to the dissemination of highly valuable and confidential company information beyond Samsung's control. Whatever an organization's size, IP represents a substantial portion of its value and needs to be protected.

Reputational Risk

Reputational risk in the context of generative AI centers on the concern that models may ingest or output offensive, discriminatory, or derogatory language. The possibility of association with hate speech, racism, sexism, ageism, or any other form of socially unacceptable behavior poses a significant threat to a brand's integrity and public perception.

A Layered Security Approach to Managing These Risks

Deploying generative AI comes with tangible risks, and organizations must establish a comprehensive security posture to safeguard critical data. A multi-layered approach that integrates policy, process, and technology is paramount to fostering a secure environment.

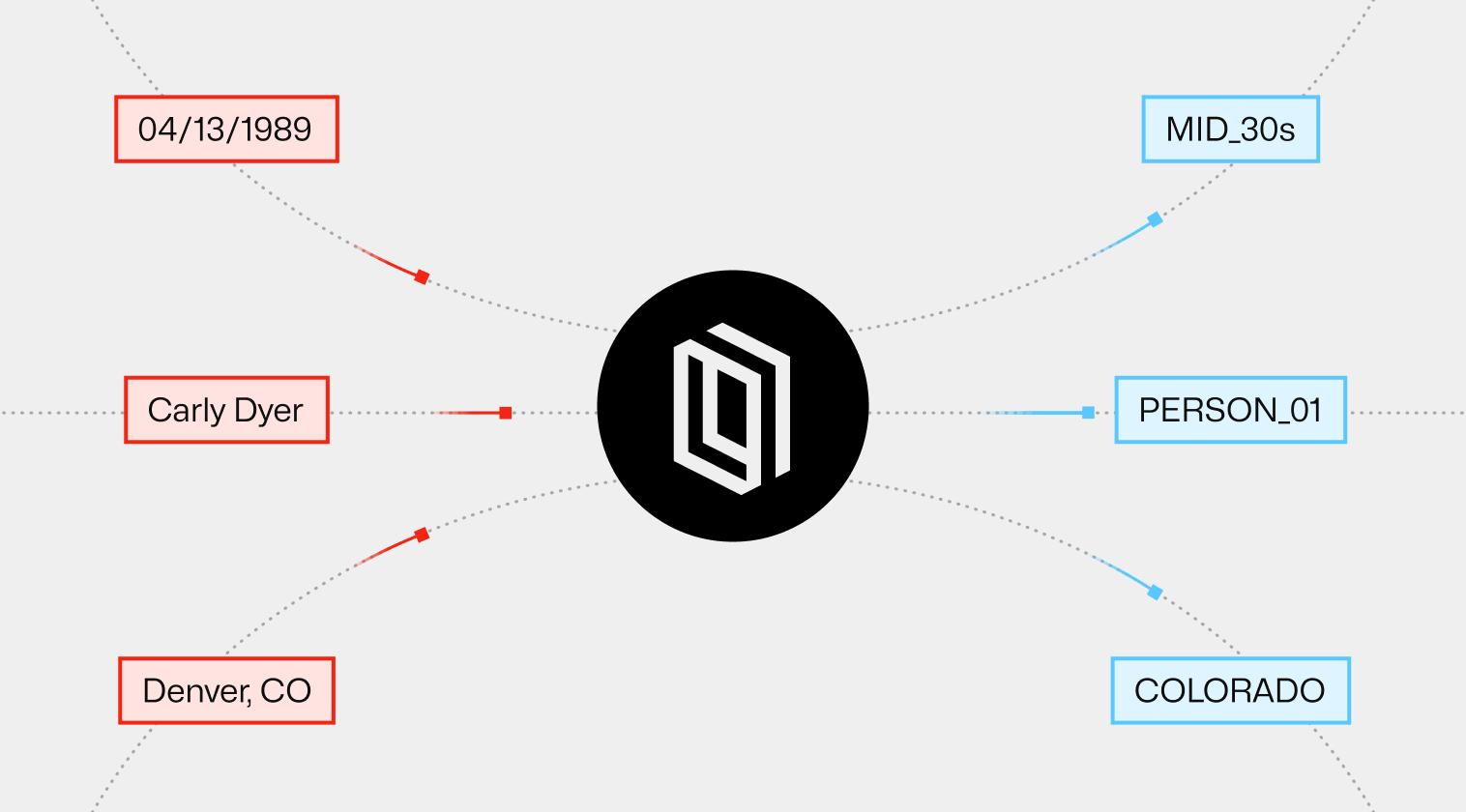

Liminal is the technology security layer for organizations looking to leverage generative AI. With Liminal, CISOs have complete control over the data submitted to LLMs. Whether through direct interactions, AI-enabled applications built in-house, or off-the-shelf software with generative AI capabilities, Liminal ensures organizations are protected from regulatory compliance risk, sensitive data and IP risk, and reputational risk.

Learn more about horizontal security for all generative AI from Liminal.