Multi-Model AI Platforms vs. Single-Provider Solutions: Which Is Right for Your Enterprise?

As enterprises race to adopt generative AI, one of the most consequential decisions leaders face is whether to standardize on a single AI provider or implement a multi-model platform approach. This choice affects everything from operational flexibility and cost structure to data security, vendor risk, and long-term innovation capacity.

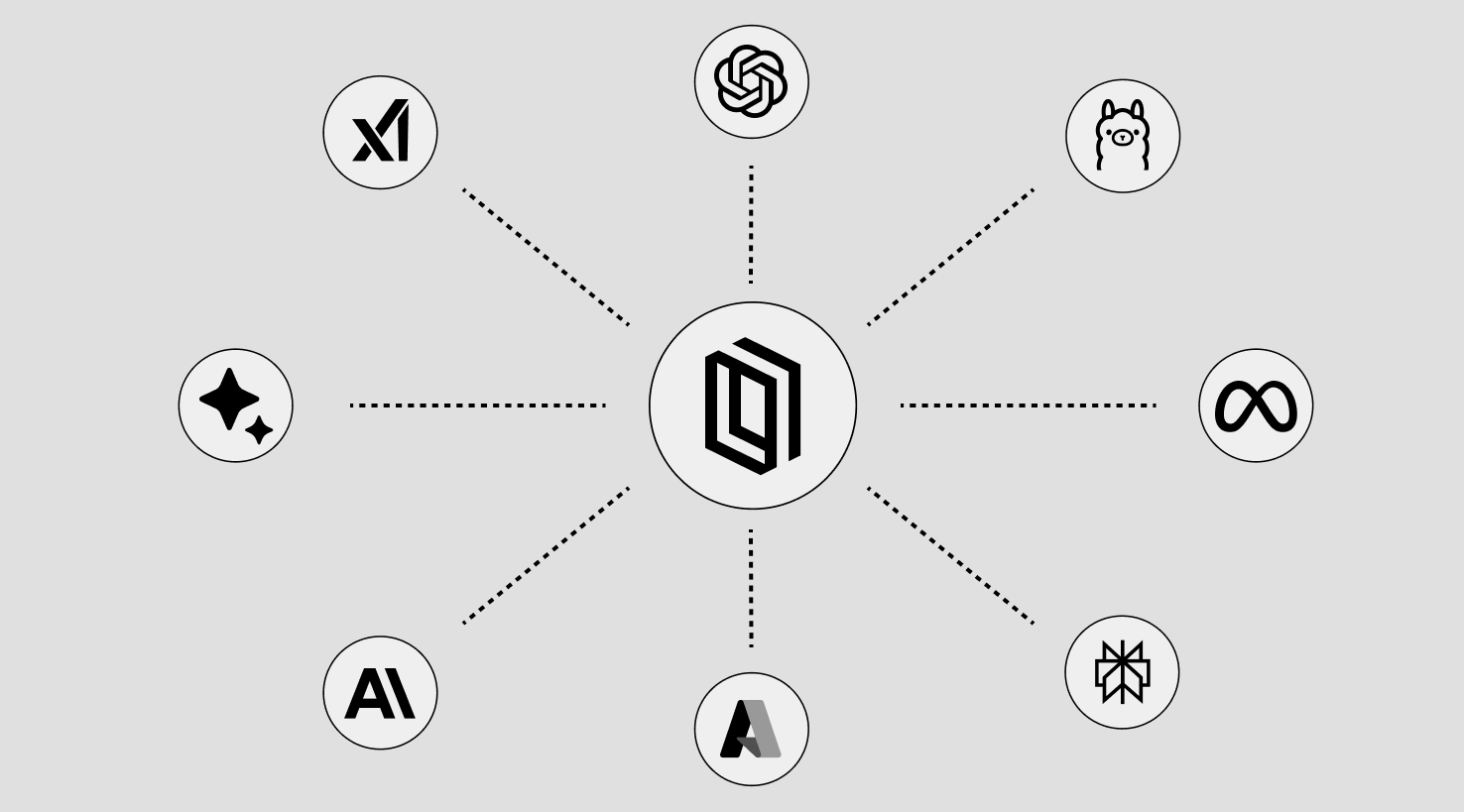

What is a multi-model AI platform?

A multi-model AI platform provides enterprises with unified access to multiple large language models from providers like OpenAI, Anthropic, Google, and Meta through a single interface, enabling model flexibility, centralized governance, and compliance capabilities that single-provider solutions cannot deliver.

Rather than committing to a single vendor's technology stack, organizations using this approach can leverage the most suitable model for each task at hand—whether that means prioritizing reasoning depth, speed, cost efficiency, personal preference, or domain-specific capabilities. This architectural flexibility becomes especially valuable as model capabilities evolve rapidly and business needs diversify. Critically, enterprise-grade multi-model platforms layer in centralized security controls, audit trails, data loss prevention, and policy enforcement—transforming what could be a fragmented collection of API connections into a governed, compliant AI infrastructure that meets regulatory and risk management standards.

This comprehensive comparison examines both approaches across critical dimensions—flexibility, cost, security, governance, and strategic risk—to help you make an informed platform decision aligned with your organization's needs and constraints.

What you'll learn:

- Core differences between multi-model and single-provider architectures

- Why data security and governance separate basic from enterprise-grade platforms

- When each approach makes strategic sense

- How to evaluate platforms against your requirements

- Real-world use cases and implementation considerations

What Is a Single-Provider AI Solution?

A single-provider AI solution means an organization standardizes on one vendor's AI offerings, typically through an enterprise agreement or dedicated deployment. Common examples include OpenAI's ChatGPT Enterprise, Microsoft Copilot, Google's Gemini Enterprise, and Anthropic's Claude for Enterprise.

Typical Characteristics

Vendor-Specific Ecosystem

Users access AI capabilities exclusively through one provider's tools, interfaces, and APIs. The organization negotiates a single enterprise contract, implements that vendor's security and compliance framework, and trains users on one platform. All AI interactions happen within that vendor's ecosystem.

Simplified Initial Procurement

For organizations already invested in a vendor's broader ecosystem—such as Microsoft 365 users adopting Copilot or Google Workspace customers using Gemini Enterprise—native integration can accelerate initial deployment.

Provider-Specific Capabilities and Limitations

Organizations access only the models and features that specific provider offers. If that vendor's models excel at certain tasks but underperform at others, users must work within those constraints. Feature availability, model updates, and capability roadmaps depend entirely on one vendor's development priorities and business decisions. There's no ability to leverage superior models from other providers for specific use cases without implementing separate solutions outside the primary platform.

Limited Governance Flexibility

Single-provider platforms implement only the governance and security controls that vendor chooses to offer. If your organization's requirements exceed the provider's capabilities—more granular access controls, specific audit trail formats, custom policy enforcement, integration with existing security infrastructure—gaps may be difficult or impossible to close without vendor cooperation. Organizations are dependent on the provider's roadmap and priorities for governance enhancements.

Vendor Lock-In and Dependency

Deep integration with one vendor creates switching costs and strategic dependency. Changing providers requires re-implementing integrations, retraining users, renegotiating contracts, and potentially rewriting applications built on provider-specific APIs or features. This dependency limits negotiating leverage and flexibility if the vendor changes pricing, terms, or capabilities, or if it experiences service quality issues.

Direct Data Exposure to Provider

With single-provider solutions, organizational data flows directly to that AI vendor's infrastructure. While enterprise agreements may include data protection commitments, organizations trust one vendor to handle sensitive information appropriately, store it securely, and honor contractual terms. If that provider experiences a security incident, changes data handling practices, or faces legal challenges, data exposure is concentrated with one party—and organizations have limited recourse beyond the terms of their contract.

Single-provider solutions may work for organizations with narrow AI use cases or those prioritizing initial simplicity over long-term flexibility. However, this approach fundamentally trades strategic flexibility, governance control, and provider independence for perceived short-term operational convenience.

What Is a Multi‑Model AI Platform?

A multi‑model AI platform provides access to AI models from multiple providers through a unified interface. Rather than locking an organization into one vendor's ecosystem, a multi‑model approach lets teams select the best model for each task—balancing capability, cost, performance, and compliance through effective LLM orchestration.

This approach represents the next step in enterprise AI maturity: flexibility without sacrificing control. Yet not all multi‑model platforms are equal. Every solution provides certain foundational capabilities, but only enterprise‑grade versions extend them with the governance, security, and observability that regulated industries require for safe, compliant AI use.

Foundational Capabilities of Multi‑Model Platforms

Unified Access Layer

Users interact with a single interface (an application, an API, or an embedded workflow) to access models from different providers. The platform manages authentication, routing, and performance behind the scenes, ensuring a consistent experience across all AI usage.

Model Selection and Routing

Organizations can match each use case to the optimal model based on its strengths, cost, and compliance requirements. With LLM orchestration, users can choose models manually or allow automated routing based on predefined rules and policies.
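Rule-based routing of this kind can be sketched in a few lines. The example below is illustrative only: the task labels, model names, and the `route` function are hypothetical and do not correspond to any particular platform's API.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class RoutingRule:
    task: str              # hypothetical task label, e.g. "summarize"
    model: str             # hypothetical model identifier
    allow_sensitive: bool  # True if the model is approved for sensitive data


# Illustrative policy; a real platform would load rules from its own config.
RULES = [
    RoutingRule("deep_analysis", "reasoning-model", allow_sensitive=False),
    RoutingRule("summarize", "fast-model", allow_sensitive=False),
    RoutingRule("summarize", "private-model", allow_sensitive=True),
]
DEFAULT_MODEL = "general-model"
SENSITIVE_DEFAULT = "private-model"  # assumed fallback approved for sensitive data


def route(task: str, sensitive: bool = False,
          manual_choice: Optional[str] = None) -> str:
    """Return the manual choice if given, else the first matching rule, else a default."""
    if manual_choice is not None:
        return manual_choice
    for rule in RULES:
        # A rule applies when the task matches and the model may see this data.
        if rule.task == task and (rule.allow_sensitive or not sensitive):
            return rule.model
    return SENSITIVE_DEFAULT if sensitive else DEFAULT_MODEL
```

In this sketch a manual choice always wins. A stricter design would also check manual choices against the sensitivity policy before honoring them.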

Provider Flexibility and Independence

By design, multi‑model platforms reduce dependency on any single vendor. If one provider experiences downtime or changes its pricing, workloads can shift to alternatives. This flexibility gives enterprises leverage in pricing negotiations and more control over their long‑term roadmap.
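A minimal sketch of provider failover follows, assuming each provider is wrapped in a plain callable that raises a hypothetical `ProviderError` on outage. The provider names and stub functions are placeholders for illustration, not real client libraries.

```python
from typing import Callable, List, Sequence, Tuple


class ProviderError(Exception):
    """Raised by a (hypothetical) provider wrapper on outage or timeout."""


def complete_with_failover(
    prompt: str,
    providers: Sequence[Tuple[str, Callable[[str], str]]],
) -> Tuple[str, str]:
    """Try providers in priority order; return (provider_name, response)."""
    errors: List[str] = []
    for name, call in providers:
        try:
            return name, call(prompt)
        except ProviderError as exc:
            # Record the failure and fall through to the next provider.
            errors.append(f"{name}: {exc}")
    raise ProviderError("all providers failed: " + "; ".join(errors))


# Stub providers used only to demonstrate the control flow.
def primary(prompt: str) -> str:
    raise ProviderError("simulated outage")


def secondary(prompt: str) -> str:
    return f"echo: {prompt}"


used, reply = complete_with_failover("hello", [("primary", primary), ("secondary", secondary)])
```

Here `used` ends up as `"secondary"` because the primary stub always fails. A production setup would typically add timeouts, retries, and health checks on top of this ordering.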

Most multi‑model platforms deliver these fundamentals, providing organizations with convenience and choice. Enterprise‑grade multi‑model platforms, however, go further—adding the critical layer of governance, security, and observability that enables safe, compliant AI at scale.

Enterprise‑Grade Multi‑Model Platforms

Advanced enterprise platforms build on the foundational capabilities of unified access, routing, and flexibility by introducing deep governance, security, and visibility designed for enterprise scale and accountability.

- Centralized policy enforcement and governance — Define acceptable‑use, compliance, and data‑handling rules once and apply them consistently across all AI providers.

- Sensitive data protection — Prevent exposure of confidential, personal, or regulated information before it leaves corporate boundaries.

- Role‑based access control (RBAC) — Align model access and capability levels to roles, responsibilities, and expertise so teams can safely leverage the right tools for their work while maintaining oversight.

- Comprehensive audit logging — Maintain transparent records of model activity that support compliance reporting, operational insight, and continuous improvement.

- Observability and insights — Gain up‑to‑the‑minute visibility into how AI is being leveraged across the organization to identify adoption patterns, uncover new opportunities, and refine outcomes.

Why Governance‑Enabled Multi‑Model Platforms Matter

Enterprises—particularly those in financial services, healthcare, legal, public‑sector, and other regulated environments—must maintain strict control over data flow and accountability. Governance‑enabled multi‑model platforms make that possible by enforcing consistent policies independent of which AI provider handles a request and by maintaining a transparent, auditable chain of custody for every AI interaction.

In contrast, single‑provider and basic aggregator solutions may deliver convenience but often obscure critical details, such as where user data is stored or how long vendor systems retain prompts. Enterprise multi‑model platforms fill this oversight gap, establishing the platform itself—not the external model vendors—as the authoritative governance boundary for secure enterprise AI.

The Data Security Reality of AI Model Providers

Even as major AI providers enhance their privacy options and enterprise offerings, significant transparency gaps remain around how user data is stored, accessed, and potentially reused. These realities highlight why governance‑enabled multi‑model platforms are critical for enterprises handling sensitive information.

Prompt Data Storage and Retention

AI providers vary widely in how long they keep user data, and most store prompts and interactions far longer than many enterprises realize. According to Anthropic's privacy documentation, the company behind Claude retains certain customer data for up to five years to meet operational and legal needs. While large providers typically offer enterprise contracts with shorter retention windows or data‑deletion options, default service terms often keep user inputs well beyond what regulated organizations can justify.

For enterprises, this means data entered into external AI systems can persist long after projects end or employees leave, creating long‑term exposure risk unless governance controls prevent sensitive information from being shared in the first place.

Data Exposure and Provider Risk

Even if a model provider promises confidentiality, actual safeguards vary dramatically. A 2025 Business Digital Index report found that half of AI providers fail to meet basic corporate data‑security standards, including secure transport, encryption, and access‑management protocols. Many tools also lack clear data‑deletion policies or consistent breach‑disclosure procedures.

These inconsistencies mean organizations cannot assume that AI vendors apply the same protections they expect from traditional IT or cloud partners—a gap that becomes especially problematic when employees experiment with unapproved AI tools.

Human Review of Prompts

Another overlooked vector of data exposure stems from human involvement in AI safety operations. As reported by TechCrunch, even leading providers such as OpenAI continue to route certain interactions for human review—particularly those flagged for policy violations, safety testing, or system refinement.

While these reviews are essential for improving responsible‑AI mechanisms, they also mean that organizational data, prompts, or outputs may be manually viewed by provider teams under specific circumstances. For enterprises managing confidential, regulated, or privileged information, this introduces an accountability gap that only policy‑based governance can close.

Legal Discovery and Data Access

Beyond accidental exposure, stored AI data can become legally discoverable. Recent legal developments highlight this growing risk: under a court order, OpenAI was compelled to provide chat records as part of an ongoing investigation. The case demonstrated that user prompts, outputs, and metadata—if retained—can be subpoenaed or otherwise demanded by courts or regulators.

For legal, healthcare, or financial institutions, this means that sensitive client data shared with third-party AI services could later be pulled into discovery or regulatory reviews, creating liability and reputational exposure.

Why These Risks Matter

Across these scenarios—from years‑long data retention and variable security maturity to human review and legal discoverability—one conclusion is clear: enterprises cannot fully control their data once it reaches a public or single‑vendor AI service.

Governance‑enabled multi‑model platforms solve this by creating a controlled intermediary layer. Sensitive‑data protection capabilities prevent proprietary or regulated information from leaving corporate boundaries, while centralized policies and auditability ensure compliance obligations are met before any interaction occurs with an external model provider.

Discover how to build comprehensive governance frameworks for enterprise AI in our Complete Guide to Enterprise AI Governance.

Choosing Between Single-Provider and Multi-Model Platforms

When Organizations Default to Single-Provider Solutions

Some organizations adopt single-provider AI approaches, though these decisions often reflect organizational inertia rather than strategic advantage:

Existing Vendor Relationships

Organizations already deeply embedded in a specific ecosystem—such as Microsoft 365 users automatically adopting Copilot or Google Workspace customers defaulting to Gemini Enterprise—may choose the path of least procurement friction. However, this convenience often comes at the cost of flexibility, governance control, and long-term strategic positioning.

Perceived Simplicity

Decision-makers sometimes assume that standardizing on one provider reduces complexity. In practice, single-provider limitations—model performance gaps, governance inflexibility, vendor dependency—often create more operational complexity over time as organizations struggle to work around constraints or implement shadow AI solutions.

Limited AI Maturity

Organizations in very early stages of AI exploration may not yet understand the diversity of use cases they'll eventually need to support. Single-provider deployments can serve as temporary learning environments, though most organizations quickly outgrow these constraints as AI adoption scales.

It's important to recognize that multi-model platforms are equally straightforward to deploy—modern enterprise platforms provide unified interfaces, single sign-on integration, and streamlined onboarding that match or exceed single-provider ease of use, while delivering significantly greater strategic value.

When Multi-Model Platforms Are the Strategic Choice

For most enterprises, multi-model platforms—particularly those with integrated governance and security—represent the optimal approach:

Understanding and Discovering Use Cases

Most organizations don't have a complete picture of how AI will be used across their enterprise when they begin adoption. Multi-model platforms with observability and insights capabilities let you see how different teams actually use AI, which models perform best for which tasks, and where new opportunities emerge. This visibility enables data-driven decisions about AI strategy rather than guessing based on vendor marketing. Whether you're just starting your AI journey or scaling existing deployments, the ability to understand real usage patterns is invaluable for maximizing value and identifying opportunities.

Regulated Industries and Sensitive Data

Financial services, healthcare, legal, and government organizations operating under strict data protection and compliance requirements need governance-enabled platforms. The centralized policy enforcement, sensitive data protection, audit logging, and observability that enterprise multi-model platforms provide aren't available through single-provider or basic aggregator solutions. For these organizations, governance-enabled multi-model platforms aren't optional—they're foundational to compliant AI adoption.

Cost Optimization and Provider Leverage

Multi-model platforms deliver significant cost advantages over single-provider subscriptions. Enterprise multi-model platforms like Liminal cost 40-60% less than comparable single-provider enterprise agreements while providing access to multiple best-in-class models plus comprehensive security and governance capabilities. This economic advantage comes from competitive provider pricing and efficient platform infrastructure. Organizations gain superior capabilities—model flexibility, centralized governance, observability, and sensitive data protection—at a lower total cost than single-provider alternatives.

Long-Term Strategic Flexibility

AI capabilities evolve rapidly. Multi-model platforms ensure organizations can adopt superior models as they emerge without re-architecting infrastructure, renegotiating contracts, or retraining users. This flexibility protects against vendor roadmap changes, pricing adjustments, or service quality degradation—risks that single-provider approaches concentrate rather than mitigate.

Enterprise Readiness and Scale

Organizations planning to deploy AI beyond pilot projects need platforms designed for enterprise use. Enterprise multi-model platforms provide the governance, security, observability, and management capabilities required to support AI safely and efficiently across the organization. Single-provider solutions often lack the enterprise-grade controls needed for organization-wide deployment.

The Enterprise Imperative

For organizations handling sensitive data, operating under regulatory requirements, or deploying AI at scale, governance-enabled multi-model platforms represent the only viable architecture.

The choice isn't between simplicity and sophistication—modern multi-model platforms deliver both ease of use and enterprise control. The choice is between strategic flexibility with comprehensive governance versus vendor dependency with limited oversight.

As AI becomes central to competitive advantage and operational efficiency, the platform decision becomes a strategic one. Organizations that choose governance-enabled multi-model platforms position themselves to innovate safely, adapt quickly, and maintain control over their AI journey.


How to Evaluate Multi-Model Platforms

Not all multi-model platforms deliver equal value. Organizations evaluating options should assess capabilities across several critical dimensions to ensure the platform meets both current needs and future requirements.

Model Coverage and Provider Relationships

AI platform evaluation starts with understanding which AI providers and models the platform supports. Leading platforms offer access to major providers—OpenAI, Anthropic, Google, Meta—plus emerging and specialized models. Verify the platform maintains direct relationships with providers rather than relying on indirect access that could introduce latency, reliability issues, or contractual complications.

Ask specific questions: Which models are available today? How quickly does the platform add new models as they're released? Can you access different model versions (e.g., GPT-4, GPT-4 Turbo, GPT-4o)? Does the platform support both proprietary and open-source models?

Organizations with specialized needs—multilingual capabilities, domain-specific models, or compliance requirements—should confirm the platform supports models optimized for those use cases.

Governance and Security Capabilities

This is where platforms differentiate most significantly. Basic aggregators provide model access; enterprise platforms also provide control.

Essential governance capabilities include:

Policy Enforcement: Can you define acceptable use policies, data handling rules, and compliance requirements that apply consistently across all models? Does the platform enforce these policies automatically, or do they depend on user compliance?

Sensitive Data Protection: How does the platform prevent confidential information from reaching external providers? Look for capabilities that detect and block sensitive data patterns—financial information, personal identifiers, proprietary content—before prompts leave your environment.
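To make pattern-based detection concrete, here is a minimal sketch that redacts two kinds of identifiers before a prompt is sent anywhere. The two regular expressions are deliberately simple assumptions for illustration; real detection needs far broader coverage than regex matching alone.

```python
import re
from typing import List, Tuple

# Illustrative patterns only (email addresses and US SSN-style numbers).
PATTERNS = {
    "EMAIL": re.compile(r"\b[\w.+-]+@[\w-]+\.[\w.-]+\b"),
    "SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
}


def redact(prompt: str) -> Tuple[str, List[str]]:
    """Replace each match with a placeholder and report which labels fired."""
    found: List[str] = []
    for label, pattern in PATTERNS.items():
        prompt, count = pattern.subn(f"[{label}]", prompt)
        if count:
            found.append(label)
    return prompt, found


clean, labels = redact("Email jane@example.com about SSN 123-45-6789")
# clean  -> "Email [EMAIL] about SSN [SSN]"
# labels -> ["EMAIL", "SSN"]
```

A platform could block the request outright when `labels` is non-empty, or forward only the redacted text, depending on policy.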

Audit and Compliance: What gets logged? Leading platforms capture comprehensive records: user identity, timestamp, model accessed, prompts submitted (with sensitive data redacted), outputs generated, and actions taken. Verify logs are tamper-evident, retained according to your requirements, and exportable in formats compatible with your compliance and security tools.
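One common way to make a log resistant to silent modification is a hash chain, where each entry commits to the one before it. The sketch below shows that general technique under simple assumptions (an in-memory list, hypothetical record fields). It is not a description of how any specific platform stores its logs.

```python
import hashlib
import json
from typing import Dict, List

GENESIS = "0" * 64  # placeholder hash that precedes the first entry


def _digest(prev_hash: str, record: Dict) -> str:
    payload = json.dumps(record, sort_keys=True)  # stable serialization
    return hashlib.sha256((prev_hash + payload).encode("utf-8")).hexdigest()


def append_entry(log: List[Dict], record: Dict) -> None:
    """Append a record whose hash covers the previous entry's hash."""
    prev_hash = log[-1]["hash"] if log else GENESIS
    log.append({"record": record, "prev_hash": prev_hash,
                "hash": _digest(prev_hash, record)})


def verify(log: List[Dict]) -> bool:
    """Recompute the chain; any edited or reordered entry breaks it."""
    prev_hash = GENESIS
    for entry in log:
        if entry["prev_hash"] != prev_hash:
            return False
        if entry["hash"] != _digest(prev_hash, entry["record"]):
            return False
        prev_hash = entry["hash"]
    return True
```

Changing any stored record after the fact makes `verify` return `False`, which is what lets an auditor detect tampering. Anchoring the latest hash in a separate system would strengthen this further.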

Access Controls: Does the platform integrate with your identity provider (Okta, Microsoft Entra ID (formerly Azure AD), Ping) for single sign-on and multi-factor authentication? Can you implement role-based access control that aligns model access with job functions and responsibilities?
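At its simplest, role-based access to models reduces to a lookup from role to permitted models. The roles and model names below are hypothetical. In practice the role would come from the identity provider's group or claim data rather than a hard-coded table.

```python
from typing import Dict, Set

# Hypothetical role-to-model policy for illustration.
ROLE_MODELS: Dict[str, Set[str]] = {
    "analyst": {"fast-model"},
    "attorney": {"fast-model", "reasoning-model"},
    "admin": {"fast-model", "reasoning-model", "private-model"},
}


def can_use(role: str, model: str) -> bool:
    """Deny by default: unknown roles get an empty permission set."""
    return model in ROLE_MODELS.get(role, set())
```

The deny-by-default lookup means a newly created or misspelled role gets no model access until someone grants it explicitly.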

Observability and Insights: What visibility does the platform provide into AI usage across your organization? Look for dashboards showing adoption patterns, model performance, emerging use cases, and anomaly detection that helps identify both opportunities and risks.
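As a rough illustration of what usage insight can look like underneath a dashboard, the sketch below aggregates hypothetical usage records to find the best-rated model per task. The record fields (`task`, `model`, `rating`) are assumptions, not a real platform's schema.

```python
from collections import defaultdict
from statistics import mean
from typing import Dict, Iterable, List, Tuple


def best_model_per_task(records: Iterable[Dict]) -> Dict[str, str]:
    """Return, for each task, the model with the highest average rating."""
    ratings: Dict[Tuple[str, str], List[float]] = defaultdict(list)
    for r in records:
        ratings[(r["task"], r["model"])].append(r["rating"])

    best: Dict[str, Tuple[str, float]] = {}
    for (task, model), values in ratings.items():
        avg = mean(values)
        if task not in best or avg > best[task][1]:
            best[task] = (model, avg)
    return {task: model for task, (model, _) in best.items()}


# Hypothetical sample data.
sample = [
    {"task": "summarize", "model": "fast-model", "rating": 4},
    {"task": "summarize", "model": "reasoning-model", "rating": 3},
    {"task": "contract_review", "model": "reasoning-model", "rating": 5},
    {"task": "contract_review", "model": "fast-model", "rating": 2},
]
winners = best_model_per_task(sample)
```

With this sample, `winners` maps `"summarize"` to `"fast-model"` and `"contract_review"` to `"reasoning-model"`.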

For regulated industries, verify the platform supports specific compliance frameworks relevant to your sector—SOC 2, ISO 27001, GDPR, HIPAA, or industry-specific requirements.

Performance and Reliability

Assess both technical performance and operational reliability:

Latency: Does the platform introduce significant delay compared to accessing providers directly? Well-architected platforms add minimal overhead—typically milliseconds—through efficient routing and caching strategies.

Availability: What are the platform's uptime commitments and historical performance? Look for SLAs of 99.9% or higher. Verify the platform has redundancy and failover capabilities so that if one AI provider experiences issues, workloads can shift to alternatives without service interruption.
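It helps to translate an SLA percentage into allowed downtime when comparing vendors. The helper below is simple arithmetic. The 30-day month is an assumption, and actual SLA contracts define their own measurement windows and exclusions.

```python
def allowed_downtime_minutes(sla_percent: float, days: int = 30) -> float:
    """Minutes of downtime permitted over `days` at the given SLA percentage."""
    return (1 - sla_percent / 100) * days * 24 * 60


three_nines = allowed_downtime_minutes(99.9)   # about 43.2 minutes per 30 days
four_nines = allowed_downtime_minutes(99.99)   # about 4.3 minutes per 30 days
```

The tenfold gap between 99.9% and 99.99% is why the exact figure in the SLA matters more than it might first appear.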

Scalability: Can the platform support your organization's growth? Evaluate whether it handles concurrent users, request volumes, and geographic distribution effectively. Ask about performance under load and how the platform scales during peak usage.

Integration and Deployment

Evaluate how the platform fits into your existing technology environment.

API and SDK Support: Does the platform provide robust APIs and SDKs for major programming languages? Can developers integrate AI capabilities into existing applications easily?

Workflow Integration: Can the platform connect with the tools your teams already use—Slack, Teams, Salesforce, custom applications? Pre-built data connectors accelerate adoption and reduce friction.

Security Tool Integration: Does the platform connect with your existing security infrastructure—SIEM systems, data loss prevention tools, identity providers, governance-risk-compliance platforms? Integration capabilities determine how well the platform fits your security operations.

Total Cost of Ownership

Look beyond subscription pricing to understand true costs. Consider:

- Platform licensing fees (per user, per organization, or usage-based)

- AI provider costs (does the platform include model access, or do you pay providers separately?)

- Implementation costs (integration, training, professional services)

- Operational costs (ongoing management, support, maintenance)

- Opportunity costs (time to value, productivity gains or losses)

Compare total cost of ownership across alternatives, not just sticker prices. Platforms that cost more initially but accelerate deployment, reduce governance overhead, or optimize model costs may deliver better economics than cheaper alternatives requiring extensive customization or manual management.
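The cost categories above can be combined into a simple multi-year comparison. This is a sketch with hypothetical field names and placeholder figures, not real pricing. It omits opportunity costs, which are harder to quantify.

```python
from dataclasses import dataclass


@dataclass
class CostModel:
    licensing_per_user_year: float  # platform licensing, per user per year
    provider_usage_year: float      # AI provider fees per year (0 if bundled)
    implementation_one_time: float  # integration, training, services
    operations_year: float          # ongoing management and support per year

    def total(self, users: int, years: int) -> float:
        """Total cost of ownership over the given horizon."""
        recurring = (self.licensing_per_user_year * users
                     + self.provider_usage_year
                     + self.operations_year)
        return recurring * years + self.implementation_one_time


# Placeholder numbers purely to show the calculation.
option = CostModel(licensing_per_user_year=100, provider_usage_year=1000,
                   implementation_one_time=5000, operations_year=2000)
three_year_cost = option.total(users=10, years=3)  # (1000 + 1000 + 2000) * 3 + 5000 = 17000
```

Running the same calculation for each candidate, with your own quotes, makes it easier to see when a higher sticker price is offset by lower implementation or operating costs.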

Real-World Use Cases

Multi-model platforms with enterprise governance enable safe, effective AI adoption across diverse scenarios:

Financial Services: Secure Document Analysis

A global investment bank needed to analyze thousands of regulatory filings, earnings reports, and market research documents to support investment decisions. Using a single-provider solution risked exposing confidential analysis to external AI services, while building custom infrastructure would delay deployment by months.

By implementing an enterprise multi-model platform, the bank:

- Routed complex analysis tasks to Claude for superior reasoning capabilities

- Used sensitive data protection to prevent confidential investment strategies from reaching external providers

- Maintained comprehensive audit trails demonstrating compliance with SEC and FINRA requirements

- Gave analysts access to best-in-class AI while maintaining regulatory oversight

The platform enabled the bank to accelerate research workflows while meeting stringent compliance obligations that single-provider solutions couldn't address.

Healthcare: Clinical Documentation Support

A healthcare system wanted to help physicians generate clinical documentation more efficiently without compromising patient privacy or HIPAA compliance. Direct use of consumer AI tools would violate regulations, while single-provider enterprise solutions lacked the flexibility to optimize for different documentation types.

The healthcare system deployed an enterprise multi-model platform that:

- Prevented protected health information from leaving the organization's control through sensitive data protection

- Maintained detailed audit logs supporting HIPAA compliance demonstrations

- Provided observability showing which specialties and documentation types benefited most from AI assistance

The platform enabled compliant AI adoption that improved physician efficiency while protecting patient privacy—something neither consumer tools nor single-provider solutions could deliver safely.

Legal: Contract Review and Analysis

A multinational law firm needed to accelerate contract review for clients while preserving attorney-client privilege and maintaining confidentiality obligations. Using public AI services would risk waiving privilege, while single-provider tools lacked governance features required by state bar ethics rules.

The firm implemented an enterprise multi-model platform providing:

- Sensitive data protection preventing client information from reaching external AI providers

- Role-based access controls ensuring only authorized attorneys accessed AI capabilities

- Comprehensive audit trails documenting how AI assisted in legal work

- Model flexibility allowing attorneys to use optimal models for different contract types

The platform enabled the firm to leverage AI productivity gains while meeting professional responsibility obligations—addressing concerns that prevented many legal organizations from adopting AI.

Common Misconceptions

"Multi-Model Platforms Are Too Complex"

Reality: Modern enterprise multi-model platforms provide unified interfaces as simple as single-provider tools. Users interact with one application, one API, or one workflow integration—the platform handles model routing and provider management behind the scenes. Complexity is abstracted away from users while giving administrators powerful governance controls.

"Single-Provider Solutions Are More Secure"

Reality: Security depends on governance capabilities, not provider count. Single-provider solutions expose data directly to that vendor with limited visibility or control. Enterprise multi-model platforms implement protective layers—sensitive data detection, policy enforcement, audit logging—that aren't available when using providers directly. The platform becomes the security boundary, providing better protection than direct provider access.

"We Can Just Build This Ourselves"

Reality: Building and maintaining multi-model infrastructure with enterprise governance requires significant ongoing investment. Organizations must:

- Integrate and maintain connections to multiple AI providers

- Build policy enforcement engines

- Implement sensitive data protection

- Create audit and compliance reporting

- Maintain security certifications

- Keep pace with rapidly evolving AI capabilities

Most organizations find that purpose-built platforms deliver faster time to value, better economics, and superior capabilities compared to custom development—letting internal teams focus on AI applications rather than infrastructure.

"Our Use Cases Don't Justify Multi-Model"

Reality: Organizations often don't discover the full range of AI applications until after deployment. Multi-model platforms with observability capabilities help identify unexpected use cases and opportunities across the organization. Starting with multi-model flexibility costs no more than single-provider approaches while preserving optionality as needs evolve.

Frequently Asked Questions

What is the main advantage of multi-model AI platforms over single-provider solutions?

Multi-model platforms provide flexibility to use the optimal AI model for each specific task while maintaining centralized governance and security controls. Organizations aren't limited by one vendor's capabilities, can shift to better models as they emerge, and avoid vendor lock-in—all while enforcing consistent policies across all AI usage.

How do multi-model platforms handle data security differently than single providers?

Enterprise multi-model platforms implement protective layers between users and external AI providers. They detect and prevent sensitive data from reaching providers, enforce organizational policies consistently, maintain comprehensive audit trails, and provide visibility into AI usage. Single-provider solutions expose data directly to that vendor with limited intermediary controls.

Are multi-model platforms more expensive than single-provider solutions?

Enterprise multi-model platforms typically cost 40-60% less than comparable single-provider enterprise agreements while providing access to multiple best-in-class models plus comprehensive governance capabilities. The economic advantage comes from competitive provider pricing and efficient platform infrastructure.

How difficult is it to switch from a single provider to a multi-model platform?

Modern multi-model platforms support straightforward migration. Most provide APIs compatible with major providers, allowing applications to transition with minimal code changes. The platform handles provider connections, authentication, and routing, simplifying what could otherwise be complex re-architecture. Organizations can often pilot multi-model platforms alongside existing solutions before full transition.
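When a platform does offer a provider-compatible API, migration can come down to changing a base URL. The sketch below assumes an OpenAI-style chat-completions path and uses placeholder hostnames. Whether a given platform exposes this exact shape is an assumption to verify with the vendor.

```python
import json
import urllib.request

# Placeholder hosts; the shared path assumes an OpenAI-style endpoint.
DIRECT_BASE_URL = "https://provider.example.com"
GATEWAY_BASE_URL = "https://gateway.example.com"


def build_chat_request(base_url: str, api_key: str, model: str,
                       prompt: str) -> urllib.request.Request:
    """Build (but do not send) a chat request; only base_url differs per target."""
    body = {"model": model, "messages": [{"role": "user", "content": prompt}]}
    return urllib.request.Request(
        url=f"{base_url}/v1/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers={"Authorization": f"Bearer {api_key}",
                 "Content-Type": "application/json"},
        method="POST",
    )


# Same application code, different target: only the base URL changes.
before = build_chat_request(DIRECT_BASE_URL, "placeholder-key", "some-model", "Hello")
after = build_chat_request(GATEWAY_BASE_URL, "placeholder-key", "some-model", "Hello")
```

Keeping the base URL in configuration rather than in code makes it straightforward to pilot a platform alongside an existing integration.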

Do multi-model platforms work for small organizations or only enterprises?

Enterprise multi-model platforms are designed for organizations with governance and compliance requirements, and those requirements exist at every size. Small and mid-sized organizations gain model flexibility, cost optimization, and built-in governance without building infrastructure themselves. The platform scales from pilot deployments to organization-wide adoption.

How do I know which models to use for different tasks?

Enterprise multi-model platforms with observability capabilities help answer this question through data. You can experiment with different models for various use cases and see which perform best. Many platforms provide intelligent routing that automatically selects appropriate models based on prompt characteristics, or you can define routing rules based on your requirements.

What happens if one AI provider has an outage?

Multi-model platforms with proper architecture provide resilience through provider diversity. If one provider experiences downtime or degraded performance, the platform can route workloads to alternative models automatically. This redundancy isn't possible with single-provider solutions, where outages directly impact all users.

How do multi-model platforms ensure compliance with regulations like GDPR or HIPAA?

Enterprise platforms implement compliance capabilities that include sensitive data protection to keep regulated information from reaching external providers, comprehensive audit logging to demonstrate compliance, data residency controls to meet localization requirements, and policy enforcement aligned with regulatory obligations. Leading platforms maintain attestations and certifications (SOC 2, ISO 27001) and provide compliance documentation supporting audits.

Can we use both public and private AI models through multi-model platforms?

Yes, advanced multi-model platforms support both cloud-based models from major providers and privately deployed models running in your own infrastructure. This flexibility lets organizations use public models for non-sensitive workloads while routing sensitive applications to private deployments, optimizing for both capability and control.

What should we look for in an enterprise multi-model platform vendor?

Prioritize vendors demonstrating: proven customer deployments in your industry, comprehensive governance and security capabilities (not just model access), integration with your existing security and identity infrastructure, strong support and customer success programs, financial stability and product roadmap alignment with your needs, and transparent pricing with clear total cost of ownership.

Conclusion

The choice between single-provider and multi-model AI platforms is fundamentally a strategic decision about flexibility, control, and long-term positioning. While single-provider solutions may seem simpler initially, they trade strategic optionality for perceived convenience—often creating more complexity over time as organizations work around limitations or manage shadow AI.

Enterprise multi-model platforms deliver the capabilities modern organizations need: model flexibility to use optimal AI for each task, comprehensive governance enabling safe adoption in regulated industries, cost advantages through competitive provider pricing, and strategic independence protecting against vendor dependency.

For organizations handling sensitive data, operating under compliance requirements, or deploying AI at scale, governance-enabled multi-model platforms aren't optional—they're foundational to responsible AI adoption.

Key Takeaways:

- The platform you choose determines not just which models you can access today, but how quickly you can adapt as AI capabilities evolve, how effectively you can govern AI usage across your organization, and whether you maintain strategic control over this increasingly critical technology.

- Organizations choosing enterprise multi-model platforms position themselves to innovate safely, optimize continuously, and maintain independence—essential advantages as AI becomes central to competitive differentiation and operational excellence.

Next Steps:

Ready to evaluate how a governance-enabled multi-model platform can accelerate your AI strategy while maintaining security and compliance? Liminal provides enterprise-grade multi-model access with comprehensive governance, delivering 40-60% cost savings compared to single-provider alternatives while ensuring your sensitive data stays protected.
